Thursday, December 31, 2009

Types of Data Models

This post talks about the different types of data models we create when working with databases. The different data models include:

Conceptual Data Model

In this model, an Entity Relationship Diagram (ERD) is created. This diagram identifies all the entities in the system and defines the relationships between them.

Logical Data Model

In this model, the data is normalized. Normalization is the process of organizing data into simple, well-structured tables so that each fact is stored only once, which reduces redundancy and avoids update anomalies. The data is arranged in tables, primary keys (identifiers) are identified, and relationships (based on the ERD) are defined among the tables.

Physical Data Model

In this model, the logical model is mapped to the target database technology. The schema is defined, including the attributes (columns and their data types), primary keys, foreign keys and relationships.

Hope this helps. Stay tuned for more...

Windows SharePoint Services vs Microsoft Office SharePoint Server

In this post, I will try to explain the difference between Windows SharePoint Services (WSS) and Microsoft Office SharePoint Server (MOSS). People new to SharePoint tend to use the two terms interchangeably. However, these are two different (although related) products. The following sections explain the difference between the two.

Windows SharePoint Services (WSS)

WSS is part of the Windows Server technologies for Windows Server 2003 and 2008, but it has to be installed separately. It requires IIS 6.0 or later and .NET Framework 3.0. After you have installed WSS, you have access to the following features:

• Sites, Lists

• Blogs, RSS Feeds

• Alerts, Workflows

• Creating Team Sites

• Document Management

WSS also integrates with other Microsoft Office products, such as Outlook and Excel.

Microsoft Office SharePoint Server (MOSS)

MOSS is a separate product offered under the Microsoft Office umbrella, and it requires a separate license. In addition to the features provided by WSS, MOSS offers the following:

• Business Intelligence

• Business Data Catalog

• Business Data Web Parts

• MySites (customizable personal sites for users)

• Advanced Workflows

• Policies

• Content Authoring

You can download the above two products from the following links:

WSS with SP

MOSS 2007 Trial Version with SP2

Stay tuned for more...

Monday, December 28, 2009

Windows Vista SP2 to the rescue

If you are a Windows Vista user and have been pulling your hair out over performance issues, you should install Windows Vista SP2 immediately. You can download it from the following link:

Windows Vista SP2

I installed it recently, stopped a few startup services, and have stopped complaining about Vista ever since (good work, Microsoft, if a bit late).

Thursday, December 24, 2009

Running ASP.NET 2.0 under IIS 7.0

In this post, I will share my experience of running ASP.NET 2.0 applications under IIS 7.0. If you run an existing ASP.NET 2.0 application under IIS 7.0, you may get a 500 - Internal Server Error. This happens because IIS 7.0 has a new, redesigned request-processing architecture.

In earlier versions of IIS (6.0 and below), ASP.NET applications run under IIS through an ISAPI extension. IIS has its own processing model (or pipeline) for ISAPI extensions, and every request must go through it. In addition to this pipeline, an ASP.NET application has its own request pipeline (HTTP modules and handlers) which processes the requests handed to it. As a result, there are two pipelines: one for native ISAPI extensions and another for managed applications.

IIS 7.0 merges the two pipelines into a single integrated pipeline which handles both native and managed requests. This gives developers additional capabilities. For example, .NET features such as authentication and authorization can now be applied to images, scripts and other static files which previously never reached ASP.NET. Similarly, URL rewriting, available for IIS 7.0 as an add-on module, is a powerful way to create SEO-friendly URLs.

Due to this architecture, ASP.NET 2.0 applications (in particular those that register HTTP modules or handlers under <system.web>) may not run under IIS 7.0 out of the box, and IIS returns a 500 - Internal Server Error when you browse the application. The solutions to this problem are described in the following sections.

Change settings in web.config file

In this solution, we have to change a few settings in the web.config file. This is done by doing the following steps:

• Move entries in <system.web>/<httpModules> to <system.webServer>/<modules>

• Move entries in <system.web>/<httpHandlers> to <system.webServer>/<handlers>

In addition, add the following entry directly under the <configuration> element:

    <system.webServer>
        <validation validateIntegratedModeConfiguration="false" />
    </system.webServer>

Change the Application Pool Setting in IIS

This solution is easier to follow; however, it applies to all applications running under IIS and hence prevents them from taking advantage of the new IIS features. In this case, the changes are made in IIS Manager using the following steps:

Start IIS Manager and select ‘Application Pools’.

In the top right corner, click on ‘Set Application Pool Defaults’. This will display the Application Pool Defaults dialog.

For the ‘Managed Pipeline Mode’ property, select ‘Classic’ and close the dialog.

Now when you browse your ASP.NET 2.0 application, it should run successfully. Stay tuned for more.

XCopy and Insufficient Memory Error

Recently I came across an interesting situation with XCopy, so I thought I would share my experience. As part of my daily backup procedure, I use the MS-DOS XCopy command in a scheduled .bat file to copy a large number of files from one machine to another. The XCopy command had been working fine until recently, when I noticed that not all of the data was being copied.

I ran the .bat file manually to see what was going on. After some time, the command window displayed the following message:

Insufficient Memory…

I was using a powerful machine and memory (RAM) was not an issue. After some googling, I finally figured out the source of the problem, which had nothing to do (at least in my case) with available memory. The problem was a long file name which prevented the XCopy command from running to completion. You may be surprised, but read on.

The XCopy command fails on a fully qualified file name (path + file name) longer than 254 characters, which is close to the 260-character maximum path length (MAX_PATH) of the Windows API. The message Insufficient Memory… is very misleading and doesn’t reveal the actual problem.
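If you would rather find the offending files before running XCopy, a small C# sketch like the one below can list every path above the limit. The helper name FindLongPaths is hypothetical, the 254-character threshold is taken from the observation above, and the sample paths are made up. In practice the input could come from Directory.EnumerateFiles with SearchOption.AllDirectories, although older .NET Framework versions may themselves throw PathTooLongException on such paths. The sketch uses top-level statements, so it assumes C# 9 or later.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: returns every path whose full length exceeds maxLength.
// The default of 254 mirrors the XCopy limit described in the post.
static List<string> FindLongPaths(IEnumerable<string> paths, int maxLength = 254) =>
    paths.Where(p => p.Length > maxLength).ToList();

// Made-up sample input; a real run might enumerate a backup folder instead.
var sample = new List<string>
{
    @"D:\Backup\short.txt",
    @"D:\Backup\" + new string('x', 260) + ".txt"
};

foreach (string path in FindLongPaths(sample))
{
    // Print the length and the start of each offending path.
    Console.WriteLine($"{path.Length} chars: {path.Substring(0, 40)}...");
}
```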

I shortened the names of a few files, which fixed the problem. If that is not an acceptable solution for you, you can look into XXCopy (commercial) or Robocopy (free, from Microsoft). Although I haven’t used either of them, I have seen some people complain that XXCopy still doesn’t do the job. Robocopy, on the other hand, seems to be a suitable solution, so you can try your luck with that (if you don’t want to shorten the file names :-).

Stay tuned for more…

Friday, December 18, 2009

IIS 7.0 and HTTP Errors

Recently I have been working with Internet Information Services (IIS) 7.0 and ran into a few issues (obviously due to my lack of knowledge :-). I configured an ‘Application’ under the ‘Default Web Site’ but was unable to run it. Worse, I only got the following error message, which gave no clue about the problem:

HTTP Error 500 - Internal Server Error

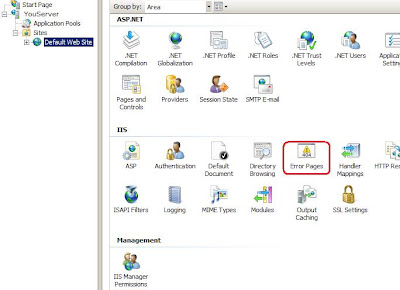

I unchecked the Show friendly HTTP error messages option in Internet Explorer (Tools -> Internet Options -> Advanced tab, under Browsing), but to my frustration I then got a completely blank screen (no error at all :-). After some research, it dawned on me that I had not turned on the HTTP Error feature when I installed IIS 7.0. So I went ahead and installed it (it is found under Web Server -> Common IIS Features -> HTTP Features -> HTTP Error when installing IIS). With this feature installed, an Error Pages icon (shown below) appears in IIS Manager.

After turning on the HTTP Error feature, there is one more step needed before you can see the proper error message. In IIS Manager, click on Default Web Site and select the Error Pages feature as shown below:

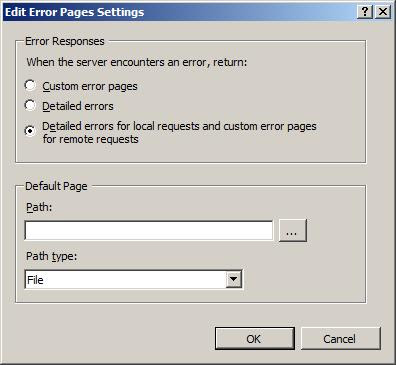

This brings you to another screen, where you click Edit Feature Settings as shown below:

Next, you will see an Edit Error Pages Settings dialog box. Make sure the Detailed errors for local requests and custom error pages for remote requests option is selected as shown below:

Now when I browsed my application, I was delighted to see the error message (had to fix it though :-) as shown below:

Detailed vs. Custom Errors

IIS 7.0 displays either a custom or a detailed error message, depending on where the request comes from. A custom error message is displayed to a client accessing the website from a remote location. This is a short, user-friendly message which doesn’t reveal any information about the website.

A detailed error message, on the other hand, is displayed when browsing locally (on the same machine), which is usually what a developer or administrator does when debugging. This type of error message is more verbose and informative, which helps in debugging the application. A detailed message looks like the one shown above.

Hope this was useful. Stay tuned for more...

Thursday, November 26, 2009

LINQ Explained - Index

For your convenience, I am adding the following index of my ongoing series on Language Integrated Query or LINQ.

LINQ Explained - 1: Introduction to LINQ

LINQ Explained - 2: Features used by LINQ

LINQ Explained - 3: Some more features used by LINQ

LINQ Explained - 4: LINQ Syntax

LINQ Explained - 5: LINQ to SQL

LINQ Explained - 6: LINQ to Objects

LINQ Explained - 7: LINQ to XML

LINQ Explained – Part 4

This is the fourth part of my ongoing series on Language Integrated Query or LINQ. I have been away from this series for a while; however, my following posts (including this one) are aimed at completing it. In the second and third posts, we looked at the language features you need to understand to have a full grasp of LINQ. In this post, we will have a detailed look at LINQ syntax and explore its different features. So let us get started.

Introduction

LINQ is a set of language extensions added to C# and VB.NET which makes queries a first-class concept in these languages. It provides a unified programming model for managing data across different data domains. As I mentioned in my first post, using LINQ we can query and operate on different data domains, including relational databases, XML, custom entities, DataSets or any third-party data source. Above all, queries now apply to in-memory data as well, rather than only to a persistent medium. From a developer’s point of view, the interface to each data domain remains the same, while the LINQ provider for that domain is responsible for translating the queries to target it.

Since LINQ is a first-class concept in C# and VB.NET, these languages come with built-in support for LINQ. LINQ queries benefit from features including IntelliSense and compile-time syntax checking. LINQ queries rely on standard query operators (discussed shortly), which are a set of methods used to fetch, filter, sort and shape the data.

Query Expression and Method-Based Queries

A LINQ query is reminiscent of standard SQL query syntax. The purpose of LINQ is to add data querying capabilities to the .NET Framework so that any data domain can be processed with the same ease. LINQ relies on concepts including Extension Methods, Anonymous Types, Anonymous Methods and Lambda Expressions, discussed in earlier posts.

A LINQ query is also known as a query expression or query syntax. A query expression is a declarative syntax for writing queries which allows us to filter, group and order data. According to MSDN, “a query expression operates on one or more information sources by applying one or more query operators from either the standard query operators or domain-specific operators”. This means that LINQ can operate on different data domains using the standard operators or domain-specific operators, possibly developed by a third party. To me this is a polymorphic behavior of LINQ. The result of a query expression is a sequence of elements (or objects); any object which implements the IEnumerable<T> interface is a sequence. The resulting sequence can be iterated with the foreach statement.

Let us see a simple example in listing 1 which shows a LINQ Query in action:

Listing 1

    int[] prime = new int[8] { 2, 3, 5, 7, 11, 13, 17, 19 }; // Line 1
    var primeNumbers =                                       // Line 2
        from p in prime                                      // Line 3
        where p > 0                                          // Line 4
        select p;                                            // Line 5
    foreach (int p in primeNumbers)                          // Line 6
    {
        // use p
    }

In the above listing, a list of prime numbers is queried and the result is assigned to the variable primeNumbers. The LINQ query (lines 2-5) resembles a SQL statement with the standard from, where and select clauses, but it is applied to an in-memory collection of numbers rather than a persistent medium (such as a database or XML). Although the example is simple, I am sure you can already see the strength of LINQ. The rest of the example (var, foreach) is what we talked about in the previous post.

A query expression can also be written using method-based query syntax. A method-based query uses extension methods and lambda expressions and is a rather concise way of writing queries. There is no performance difference between the two: a query expression is more readable, while a method-based query is more concise to write, so it really comes down to your choice of syntax. Keep in mind that all query expressions are translated into method-based queries. Using method-based syntax, Listing 1 can be written as follows:

Listing 2

    IEnumerable<int> primeNumbers = prime
        .Where(p => p > 0)
        .Select(p => p);

Avid readers must have figured out why the foreach loop can be applied to the variable primeNumbers in Listing 1. The type of primeNumbers is inferred as IEnumerable<int>, which represents a sequence of elements, and such a sequence can be iterated using a foreach loop.
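To check that the two forms really are interchangeable, here is a minimal, self-contained sketch that runs a query expression and its method-based equivalent against the same array and compares the results. The filter is changed to p > 10 (an assumption for illustration) so that it actually removes some elements. It uses top-level statements, so it assumes C# 9 or later.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

int[] prime = { 2, 3, 5, 7, 11, 13, 17, 19 };

// Query expression, in the style of Listing 1.
IEnumerable<int> querySyntax =
    from p in prime
    where p > 10
    select p;

// Method-based query, in the style of Listing 2.
IEnumerable<int> methodSyntax = prime
    .Where(p => p > 10)
    .Select(p => p);

Console.WriteLine(string.Join(", ", querySyntax));           // 11, 13, 17, 19
Console.WriteLine(querySyntax.SequenceEqual(methodSyntax));  // True
```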

I am sure by now you can spot many of the features explained in part 2 and part 3 of this series. The above queries are just making use of concepts including var keyword, extension methods, lambda expressions and enumerators.

LINQ Syntax

LINQ is reminiscent of the standard SQL language and closely resembles it. Like its SQL counterpart, a query expression consists of clauses. The three main clauses in a LINQ expression are from, where and select; a query must begin with a from clause and end with a select (or group) clause, while the where clause is optional. The general syntax of a LINQ query is as follows:

    var [query] = from …
                  where …
                  select …

The first clause in a LINQ query is the from clause. You may be wondering why a LINQ query begins with the from clause, unlike a standard SQL query, which begins with the select clause. The reason is to support IntelliSense in the Visual Studio IDE: since the from clause specifies the data source ahead of the rest of the query, the compiler already knows the type of the elements being queried, and IntelliSense can suggest their members. The from clause is then followed by the where and select clauses. You can find the full syntax of a LINQ query here.

Let us see listing 3 to analyze a LINQ query piece by piece. We start by defining a simple class followed by object initialization:

Listing 3

    public class Car
    {
        public string Type { get; set; }
        public string Color { get; set; }
    }

    Car[] cars =
    {
        new Car { Type = "SUV", Color = "Green" },
        new Car { Type = "SUV", Color = "Black" },
        new Car { Type = "4x4", Color = "Red" },
        new Car { Type = "Truck", Color = "Orange" },
        new Car { Type = "Jeep", Color = "Black" }
    };

Now that we have an array of cars with their properties initialized, we use a LINQ query to find all the cars of a specific type:

    IEnumerable<Car> search =
        from myCar in cars
        where myCar.Type == "SUV"
        select myCar;

The query begins with the from clause. In LINQ to Objects, the from clause operates on source sequences implementing the IEnumerable<T> interface. This clause is made up of two parts: from and in. The in part points to the source sequence, while the from part declares a range variable (myCar here) used for iterating through the source sequence.

Next is the where clause, used for filtering. Behind the scenes, this clause is converted into a call to the Where query operator, which for in-memory sequences is an extension method of the Enumerable class. This method accepts a lambda expression as a parameter to apply the filter.

Next in the sequence is the select clause. This clause defines the value produced for each element that passes the filter, and the resulting sequence is assigned to the query variable. The value can be of any type, including an instance of a class, a string, a number, a boolean etc. This clause even lets a new (anonymous) type be created on the fly.
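A small, self-contained sketch of the select clause creating a type on the fly. It re-creates the car data from Listing 3 with anonymous types instead of the Car class (an assumption made only to keep the example in one file) and uses C# 9 top-level statements.

```csharp
using System;
using System.Linq;

// Anonymous types stand in for the Car class from Listing 3.
var cars = new[]
{
    new { Type = "SUV",   Color = "Green" },
    new { Type = "SUV",   Color = "Black" },
    new { Type = "4x4",   Color = "Red" },
    new { Type = "Truck", Color = "Orange" },
    new { Type = "Jeep",  Color = "Black" },
};

// select builds a new anonymous type for each matching car.
var suvLabels =
    from myCar in cars
    where myCar.Type == "SUV"
    select new { myCar.Color, Label = myCar.Type + " (" + myCar.Color + ")" };

foreach (var item in suvLabels)
{
    Console.WriteLine(item.Label); // SUV (Green), then SUV (Black)
}

// select can also project a single value, such as a string.
var colorsOnly = from myCar in cars select myCar.Color;
```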

Finally, we can iterate through the variable ‘search’, since it is of type IEnumerable<Car>, using the following code:

    foreach (Car c in search)
    {
        // use c.Type, c.Color
    }

Standard Query Operators

So far we have hardly scratched the surface of LINQ syntax and have seen only some very simple LINQ queries. In reality, the discussion of query expressions is incomplete without the Standard Query Operators. The standard query operators are an API defined in the Enumerable and Queryable classes in the System.Linq namespace. These operators are extension methods, most of which accept lambda expressions as arguments. They operate on sequences, where any object which implements the IEnumerable<T> interface qualifies as a sequence. These operators are used to traverse, filter, sort and perform various other functions on the given data. In other words, they provide many of the features of a standard SQL query, including Distinct, Group, Set, Order By, Select etc.

I stated above that a query expression is converted to a method-based query. In a method-based query, each clause is converted to its respective Standard Query Operator (an extension method). For example, the where clause is converted to the Where operator, while the select clause is converted to the Select operator. To keep it simple, just remember that each clause in a query expression is represented by an operator when converted to a method-based query.

According to LINQ’s official documentation, Standard Query Operators can be categorized into the following:

• Restriction operators

• Projection operators

• Partitioning operators

• Join operators

• Concatenation operator

• Ordering operators

• Grouping operators

• Set operators

• Conversion operators

• Equality operator

• Element operators

• Generation operators

• Aggregate operators

A detailed explanation of each of the above is beyond the scope of this post. However, in the following sections, we will look at some of these operators and their use.

Select / SelectMany – Projection Operators

The Select operator performs a projection over a sequence and returns an object of type IEnumerable<S>. When this object is enumerated, it enumerates the source sequence and produces one output element for each item in the sequence. The signatures of the Select operator are as follows:

    public static IEnumerable<S> Select<T, S>(
        this IEnumerable<T> source,
        Func<T, S> selector);

    public static IEnumerable<S> Select<T, S>(
        this IEnumerable<T> source,
        Func<T, int, S> selector);

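In both overloads, source is the sequence to project and selector is the function applied to each element. The first argument of the selector is the element from the source sequence; in the second overload, the extra int argument is the zero-based index of that element within the source sequence. I will skip a separate example for this operator, as all the above examples make use of it :)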
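The earlier examples only use the first overload; the index-aware second overload is easy to miss, so here is a minimal C# sketch (top-level statements, C# 9 or later) on a made-up array of colors.

```csharp
using System;
using System.Linq;

string[] colors = { "Green", "Black", "Red" };

// Second overload: the selector receives the element and its zero-based index.
var numbered = colors.Select((color, index) => $"{index}: {color}");

Console.WriteLine(string.Join(", ", numbered)); // 0: Green, 1: Black, 2: Red
```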

The SelectMany operator is used with nested sequences, in other words, a sequence of sequences. It merges all the sub-sequences into one single enumerable sequence. SelectMany enumerates the source sequence, applies the selector to each element to obtain its sub-sequence, and then enumerates each sub-sequence in turn to form a flat sequence. The operator has the following signatures:

    public static IEnumerable<S> SelectMany<T, S>(
        this IEnumerable<T> source,
        Func<T, IEnumerable<S>> selector);

    public static IEnumerable<S> SelectMany<T, S>(
        this IEnumerable<T> source,
        Func<T, int, IEnumerable<S>> selector);

The source is the sequence to be enumerated. The selector is the function applied to each element to produce its sub-sequence; in the second overload, the selector also receives the element’s zero-based index.

Listing 4 shows the use of the SelectMany operator:

Listing 4

    public class Region
    {
        public int RegionID;
        public string RegionName;
        public List<Product> Products;
    }

    public class Product
    {
        public string ProductCode;
        public string ProductName;
    }

Now we will initialize a list of Region objects, each with a child list of Product objects:

    List<Region> products = new List<Region>
    {
        new Region { RegionID = 1, RegionName = "North",
            Products = new List<Product> {
                new Product { ProductCode = "EG", ProductName = "Eggs" },
                new Product { ProductCode = "OJ", ProductName = "Orange Juice" },
                new Product { ProductCode = "BR", ProductName = "Bread" }
            }
        },
        new Region { RegionID = 2, RegionName = "South",
            Products = new List<Product> {
                new Product { ProductCode = "CR", ProductName = "Cereal" },
                new Product { ProductCode = "HO", ProductName = "Honey" },
                new Product { ProductCode = "MI", ProductName = "Milk" },
            }
        },
        new Region { RegionID = 3, RegionName = "East",
            Products = new List<Product> {
                new Product { ProductCode = "SO", ProductName = "Soap" },
                new Product { ProductCode = "BS", ProductName = "Biscuits" },
            }
        }
    };

Now we apply the SelectMany operator to select the products from the North and East regions:

    var ProductsByRegion =
        products
            .Where(p => p.RegionName == "North" || p.RegionName == "East")
            .SelectMany(p => p.Products); // using SelectMany operator

    foreach (var product in ProductsByRegion)
    {
        string code, name;
        code = product.ProductCode;
        name = product.ProductName;
        // use code & name
    }

The above listing begins by defining two classes, Region and Product. The Region class has a child collection property, Products. Next, a list of regions is initialized such that each region in turn has multiple products. The LINQ query then applies the SelectMany operator, which flattens the sub-lists (products in this case) of the selected regions (North and East) into a single sequence to be iterated. If you run the above code, you get the following result:

EG: Eggs, OJ: Orange Juice, BR: Bread, SO: Soap, BS: Biscuits
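To run the SelectMany example without the surrounding class definitions, here is a compact sketch that rebuilds the region data with anonymous types (standing in for Region and Product, an assumption to keep it in one file) and contrasts SelectMany with a plain Select. It uses top-level statements (C# 9 or later).

```csharp
using System;
using System.Linq;

// Anonymous types stand in for the Region and Product classes of Listing 4.
var regions = new[]
{
    new { RegionName = "North", Products = new[] {
        new { Code = "EG", Name = "Eggs" },
        new { Code = "OJ", Name = "Orange Juice" },
        new { Code = "BR", Name = "Bread" } } },
    new { RegionName = "South", Products = new[] {
        new { Code = "CR", Name = "Cereal" },
        new { Code = "HO", Name = "Honey" },
        new { Code = "MI", Name = "Milk" } } },
    new { RegionName = "East", Products = new[] {
        new { Code = "SO", Name = "Soap" },
        new { Code = "BS", Name = "Biscuits" } } },
};

// SelectMany flattens the product arrays of the selected regions into one sequence.
var productsByRegion = regions
    .Where(r => r.RegionName == "North" || r.RegionName == "East")
    .SelectMany(r => r.Products);

// A plain Select would instead yield one inner array per region (a sequence of sequences).
int innerLists = regions
    .Where(r => r.RegionName == "North" || r.RegionName == "East")
    .Select(r => r.Products)
    .Count();

string result = string.Join(", ", productsByRegion.Select(p => $"{p.Code}: {p.Name}"));
Console.WriteLine(result); // EG: Eggs, OJ: Orange Juice, BR: Bread, SO: Soap, BS: Biscuits
```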

Where - Restriction Operator

The Where operator, also known as the restriction operator, filters a sequence based on a condition. The condition is provided as a predicate. The Where operator enumerates the source sequence and yields those elements for which the predicate returns true. In a query expression, we can use the where keyword in place of the Where operator. The signatures of the Where operator are as follows:

    public static IEnumerable<TSource> Where<TSource>(
        this IEnumerable<TSource> source,
        Func<TSource, bool> predicate);

    public static IEnumerable<TSource> Where<TSource>(
        this IEnumerable<TSource> source,
        Func<TSource, int, bool> predicate);

The source represents the sequence to be enumerated, while the predicate defines the condition or filter to be applied to each element. In the second overload, the predicate also receives the zero-based index of the element.
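A short sketch of both Where overloads on a made-up array of numbers (C# 9 top-level statements). The first filters on the value alone; the second keeps only the elements at even positions, which the value-only overload cannot express directly.

```csharp
using System;
using System.Linq;

int[] numbers = { 10, 15, 20, 25, 30 };

// First overload: the predicate sees only the element.
var overTwenty = numbers.Where(n => n > 20);

// Second overload: the predicate also receives the zero-based index.
var evenPositions = numbers.Where((n, index) => index % 2 == 0);

Console.WriteLine(string.Join(", ", overTwenty));    // 25, 30
Console.WriteLine(string.Join(", ", evenPositions)); // 10, 20, 30
```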

Join / GroupJoin - Join Operators

The Join operator is the counterpart of the INNER JOIN used in SQL and serves the same purpose. It returns a sequence of elements from two different sequences with matching keys. This operator has the following signatures:

    public static IEnumerable<TResult> Join<TOuter, TInner, TKey, TResult>(
        this IEnumerable<TOuter> outer,
        IEnumerable<TInner> inner,
        Func<TOuter, TKey> outerKeySelector,
        Func<TInner, TKey> innerKeySelector,
        Func<TOuter, TInner, TResult> resultSelector);

    public static IEnumerable<TResult> Join<TOuter, TInner, TKey, TResult>(
        this IEnumerable<TOuter> outer,
        IEnumerable<TInner> inner,
        Func<TOuter, TKey> outerKeySelector,
        Func<TInner, TKey> innerKeySelector,
        Func<TOuter, TInner, TResult> resultSelector,
        IEqualityComparer<TKey> comparer);

In the above overloads, outer and inner represent the two source sequences. The functions outerKeySelector and innerKeySelector extract the keys on which the join is performed; both must return the same key type, TKey. The resultSelector function projects each matching pair into an element of the final output. In the second overload, we can also supply a custom comparer to match keys from the two sequences based on custom logic.

Listing 5 shows how to join two different lists on a matching key; the join clause in the query expression is translated into a call to the Join operator:

Listing 5

    public class Developer
    {
        public string name { get; set; }
        public string projecttitle { get; set; }
    }

    public class Project
    {
        public string title { get; set; }
        public int manDays { get; set; }
        public string company { get; set; }
    }

    List<Developer> developers = new List<Developer>
    {
        new Developer { name = "Steven", projecttitle = "ImageProcessing" },
        new Developer { name = "Markus", projecttitle = "ImageProcessing" },
        new Developer { name = "Matt", projecttitle = "ImageProcessing" },
        new Developer { name = "Shaza", projecttitle = "GraphicsLibrary" },
        new Developer { name = "Neil", projecttitle = "WebArt" },
    };

    List<Project> projects = new List<Project>
    {
        new Project { title = "ImageProcessing", company = "Future Vision", manDays = 120 },
        new Project { title = "DatabaseFusion", company = "Open Space", manDays = 30 },
        new Project { title = "GraphicsLibrary", company = "Kid Zone", manDays = 88 },
        new Project { title = "WebArt", company = "Web Ideas", manDays = 57 },
        new Project { title = "GamingZone", company = "Play with Us", manDays = 50 },
    };

    var ProjectDetails =
        from dev in developers
        join pro in projects
            on dev.projecttitle equals pro.title
        select new
        {
            Programmer = dev.name,
            ProjectName = pro.title,
            Company = pro.company,
            ManDays = pro.manDays
        };

    foreach (var detail in ProjectDetails)
    {
        // use detail.Programmer, detail.Company, detail.ProjectName, detail.ManDays
    }

In the above code, notice the use of ‘equals’ rather than the ‘=’ sign. This is different from what we use in a regular SQL join statement. The example returns a sequence of developer/project pairs with matching ‘titles’ from both sequences.
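Because the join clause is translated into a call to the Join operator, the query in Listing 5 can also be written in method syntax. The sketch below does that on a trimmed copy of the data (anonymous types stand in for Developer and Project), and deliberately lower-cases one title, a hypothetical change, to show the second overload with StringComparer.OrdinalIgnoreCase as the custom comparer. It uses C# 9 top-level statements.

```csharp
using System;
using System.Linq;

var developers = new[]
{
    new { name = "Steven", projecttitle = "ImageProcessing" },
    new { name = "Shaza",  projecttitle = "GraphicsLibrary" },
    new { name = "Neil",   projecttitle = "webart" }, // lower-case on purpose
};

var projects = new[]
{
    new { title = "ImageProcessing", company = "Future Vision" },
    new { title = "GraphicsLibrary", company = "Kid Zone" },
    new { title = "WebArt",          company = "Web Ideas" },
};

// Method-based form of the join (default, case-sensitive key comparison).
var exact = developers.Join(
    projects,
    dev => dev.projecttitle,   // outer key selector
    pro => pro.title,          // inner key selector
    (dev, pro) => dev.name + " @ " + pro.company);

// Second overload: a custom IEqualityComparer<string> decides when keys match.
var ignoreCase = developers.Join(
    projects,
    dev => dev.projecttitle,
    pro => pro.title,
    (dev, pro) => dev.name + " @ " + pro.company,
    StringComparer.OrdinalIgnoreCase);

Console.WriteLine(string.Join("; ", exact));      // Neil is missing: "webart" != "WebArt"
Console.WriteLine(string.Join("; ", ignoreCase)); // Neil now matches
```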

The above example only returns projects that have at least one matching developer; DatabaseFusion and GamingZone are dropped. If we wanted information on all ‘projects’, irrespective of any matching ‘developer’, the above query would not work. In SQL, a left join would do the trick, since it returns all ‘projects’ along with the matching ‘developer(s)’, and NULL developer columns for projects without any developer. In LINQ, a similar purpose is served by the GroupJoin operator.

The GroupJoin operator does not return a flat sequence of elements; instead, it returns a hierarchical sequence. This sequence contains one element for each element of the outer sequence, and the matching elements from the inner sequence are grouped and attached to it. In other words, it represents a hierarchical grouping of all elements from the outer sequence, each with a child sequence (grouped together) of matching values from the inner sequence. This operator has the following signatures:

    public static IEnumerable<TResult> GroupJoin<TOuter, TInner, TKey, TResult>(
        this IEnumerable<TOuter> outer,
        IEnumerable<TInner> inner,
        Func<TOuter, TKey> outerKeySelector,
        Func<TInner, TKey> innerKeySelector,
        Func<TOuter, IEnumerable<TInner>, TResult> resultSelector);

    public static IEnumerable<TResult> GroupJoin<TOuter, TInner, TKey, TResult>(
        this IEnumerable<TOuter> outer,
        IEnumerable<TInner> inner,
        Func<TOuter, TKey> outerKeySelector,
        Func<TInner, TKey> innerKeySelector,
        Func<TOuter, IEnumerable<TInner>, TResult> resultSelector,
        IEqualityComparer<TKey> comparer);

In the above overloads, the arguments are similar to those of the Join operator, but the way it works is interesting. When the sequence returned by GroupJoin is iterated, it first enumerates the inner sequence, extracts each element’s key using innerKeySelector and groups the elements by key. The groups are stored in a hash table against their key. Next, the elements of the outer sequence are enumerated and their keys are extracted using outerKeySelector. For each outer element, the matching group is looked up in the hash table; if none is found, an empty group is used. The outer element and its group are then passed to resultSelector. This way we get a parent-child grouping of elements. Listing 6 shows how to use the GroupJoin operator (the sample data is from Listing 5):

Listing 6

    var query = projects.GroupJoin(   // outer sequence
        developers,                   // inner sequence
        p => p.title,                 // outer key selector
        d => d.projecttitle,          // inner key selector
        (p, g) => new                 // result projector
        {
            ProjectTitle = p.title,
            Programmers = g
        });

    foreach (var detail in query)
    {
        // use detail.ProjectTitle
        foreach (var programmer in detail.Programmers)
        {
            // use programmer.name, programmer.projecttitle
        }
    }

You must have noticed that we are using nested loops to access the elements. This is further proof that the elements are arranged in a parent-child hierarchy such that for each element in the outer sequence, we have matching elements (grouped together) from the inner sequence. The above example will produce the following resultset where all the ‘projects’ are displayed irrespective of a developer(s) assigned to them:

ImageProcessing: Steven, Markus, Matt

DatabaseFusion: (no developers)

GraphicsLibrary: Shaza

WebArt: Neil

GamingZone: (no developers)
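For a runnable check of the output above, the sketch below uses the developer data from Listing 5 as anonymous types and, for brevity, only the project titles (an assumption; the Project class is not needed here). It also shows a common way to get a flat, SQL-style left outer join: GroupJoin (the join ... into clause) followed by SelectMany with DefaultIfEmpty, which yields null for projects without developers. It uses C# 9 top-level statements.

```csharp
using System;
using System.Linq;

var developers = new[]
{
    new { name = "Steven", projecttitle = "ImageProcessing" },
    new { name = "Markus", projecttitle = "ImageProcessing" },
    new { name = "Matt",   projecttitle = "ImageProcessing" },
    new { name = "Shaza",  projecttitle = "GraphicsLibrary" },
    new { name = "Neil",   projecttitle = "WebArt" },
};
string[] projectTitles = { "ImageProcessing", "DatabaseFusion", "GraphicsLibrary", "WebArt", "GamingZone" };

// GroupJoin: one result per project, each with a (possibly empty) group of developers.
var grouped = projectTitles.GroupJoin(
    developers,
    title => title,
    dev => dev.projecttitle,
    (title, devs) => title + ": " + string.Join(", ", devs.Select(d => d.name)));

// Flat left outer join: join ... into (GroupJoin), then DefaultIfEmpty per group.
var leftJoin =
    from title in projectTitles
    join dev in developers on title equals dev.projecttitle into devs
    from d in devs.DefaultIfEmpty()
    select title + " - " + (d == null ? "(none)" : d.name);

foreach (string line in grouped) Console.WriteLine(line);
foreach (string line in leftJoin) Console.WriteLine(line);
```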

OrderBy..ThenBy / OrderByDescending..ThenByDescending - Ordering Operators

The OrderBy operator is used for ordering the elements of a sequence by a key, in ascending order. The signatures of this operator are as follows:

    public static IOrderedEnumerable<T> OrderBy<T, K>(
        this IEnumerable<T> source,
        Func<T, K> keySelector);

    public static IOrderedEnumerable<T> OrderBy<T, K>(
        this IEnumerable<T> source,
        Func<T, K> keySelector,
        IComparer<K> comparer);

In both overloads, source is the sequence on which the operator operates. The keySelector is a function that extracts a key of type K from each element of type T in the source sequence. In the second overload, comparer is a custom comparer where we can write our own code to perform the comparison.

You must have noticed that the return type of this operator is IOrderedEnumerable<T> and not IEnumerable<T>. Before I explain this, let me mention that the OrderBy operator is complemented by the ThenBy operator. In a regular SQL command, we can order the result set by any number of fields in addition to the primary field. The same concept is supported by the ThenBy operator: the primary ordering is done by OrderBy, and ThenBy defines a secondary ordering. ThenBy can be used any number of times in a LINQ query. Both operators work together as follows:

    source.OrderBy(...).ThenBy(...).ThenBy(...)...

The above shows that the output of OrderBy is the input to ThenBy. Going back to the return type, the ThenBy operator can only be applied to an IOrderedEnumerable<T> and not to a plain IEnumerable<T>, which is why OrderBy returns IOrderedEnumerable<T>. The example in Listing 7 sorts the projects by manDays (OrderBy) and then by title (ThenBy):

Listing 7

public class Project
{
    public string title { get; set; }
    public string company { get; set; }
    public int manDays { get; set; }
}

List<Project> projects = new List<Project>
{
    new Project { title = "ImageProcessing", company = "Future Vision", manDays = 120 },
    new Project { title = "DatabaseFusion", company = "Open Space", manDays = 30 },
    new Project { title = "GraphicsLibrary", company = "Kid Zone", manDays = 88 },
    new Project { title = "WebArt", company = "Web Ideas", manDays = 57 },
    new Project { title = "GamingZone", company = "Play with Us", manDays = 50 },
};

IEnumerable<Project> details =
    projects.OrderBy(p => p.manDays).ThenBy(p => p.title);

foreach (var project in details)
{
    // use project.manDays, project.title, project.company
}
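The second OrderBy overload is easiest to see with a comparer the framework already provides. The sketch below (the OrderByComparerDemo class and the sample strings are illustrative, not from the post) passes StringComparer.OrdinalIgnoreCase as the IComparer<string>, so titles sort alphabetically regardless of case. For contrast, it also sorts with the case-sensitive StringComparer.Ordinal, where every uppercase letter sorts before every lowercase one.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class OrderByComparerDemo
{
    // Second OrderBy overload: the key is the title itself, and the
    // IComparer<string> compares keys without regard to case.
    public static List<string> SortIgnoringCase(IEnumerable<string> titles)
    {
        return titles.OrderBy(t => t, StringComparer.OrdinalIgnoreCase).ToList();
    }

    // Same key with a case-sensitive ordinal comparer: "Zebra" sorts before
    // "apple" because uppercase letters have lower character codes.
    public static List<string> SortOrdinal(IEnumerable<string> titles)
    {
        return titles.OrderBy(t => t, StringComparer.Ordinal).ToList();
    }
}
```

For example, SortIgnoringCase on "Zebra", "apple", "Mango" gives apple, Mango, Zebra, while SortOrdinal gives Mango, Zebra, apple.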

Ordering can also be done with the OrderByDescending and ThenByDescending operators. As the names imply, these sort in descending order. OrderByDescending takes the same arguments as OrderBy:

public static IOrderedEnumerable<TSource> OrderByDescending<TSource, TKey>(
    this IEnumerable<TSource> source,
    Func<TSource, TKey> keySelector);

public static IOrderedEnumerable<TSource> OrderByDescending<TSource, TKey>(
    this IEnumerable<TSource> source,
    Func<TSource, TKey> keySelector,
    IComparer<TKey> comparer);
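As a rough sketch of the descending operators, assuming a trimmed-down Project class with the same lowercase property names as listing 7 (the DescendingDemo class is illustrative), the following puts the longest projects first and breaks ties by title in descending order:

```csharp
using System.Collections.Generic;
using System.Linq;

// Trimmed-down version of the Project class from listing 7.
public class Project
{
    public string title { get; set; }
    public int manDays { get; set; }
}

public static class DescendingDemo
{
    // Primary ordering: manDays, largest first.
    // Secondary ordering: title, descending, for projects with equal manDays.
    public static List<string> LongestFirst(IEnumerable<Project> projects)
    {
        return projects
            .OrderByDescending(p => p.manDays)
            .ThenByDescending(p => p.title)
            .Select(p => p.title)
            .ToList();
    }
}
```

With ImageProcessing at 120 and both WebArt and GamingZone set to 50 (values chosen to create a tie), the result is ImageProcessing, WebArt, GamingZone.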

Distinct / Union - Set Operators

The Distinct operator removes duplicate items from a sequence. It is the counterpart of the DISTINCT keyword in SQL. It has the following signatures:

public static IEnumerable<TSource> Distinct<TSource>(
    this IEnumerable<TSource> source);

public static IEnumerable<TSource> Distinct<TSource>(
    this IEnumerable<TSource> source,
    IEqualityComparer<TSource> comparer);

In both overloads, source is the sequence the operator works on. The second overload accepts a custom IEqualityComparer<TSource> that decides when two elements are equal. Listing 8 shows the use of the Distinct operator:

Listing 8

public class Fruit
{
    public string name { get; set; }
}

List<Fruit> fruits = new List<Fruit>
{
    new Fruit { name = "Orange" },
    new Fruit { name = "Orange" },
    new Fruit { name = "Orange" },
    new Fruit { name = "Apple" },
    new Fruit { name = "Apple" },
    new Fruit { name = "Papaya" }
};

var query =
    (from fruit in fruits
     select new { fruit.name }
    ).Distinct();

foreach (var fruit in query)
{
    // use fruit.name
}

The output of the above query is:

Orange

Apple

Papaya
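One detail worth knowing about listing 8: it works because it projects each Fruit into an anonymous type, and anonymous types compare their property values in Equals and GetHashCode. Calling Distinct() on the Fruit objects themselves would compare references, so none of the six separate objects would be removed. The sketch below uses the second overload instead, with a hypothetical FruitNameComparer that treats fruits with the same name (ignoring case) as equal. The class names are illustrative.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Fruit
{
    public string name { get; set; }
}

// Hypothetical comparer: two fruits are equal when their names match, ignoring case.
public class FruitNameComparer : IEqualityComparer<Fruit>
{
    public bool Equals(Fruit x, Fruit y)
    {
        if (ReferenceEquals(x, y)) return true;
        if (x == null || y == null) return false;
        return string.Equals(x.name, y.name, StringComparison.OrdinalIgnoreCase);
    }

    // Must agree with Equals: names that compare equal get the same hash code.
    public int GetHashCode(Fruit fruit)
    {
        if (fruit == null || fruit.name == null) return 0;
        return StringComparer.OrdinalIgnoreCase.GetHashCode(fruit.name);
    }
}

public static class DistinctDemo
{
    // Second Distinct overload: duplicates are decided by the comparer.
    public static List<string> UniqueNames(IEnumerable<Fruit> fruits)
    {
        return fruits.Distinct(new FruitNameComparer()).Select(f => f.name).ToList();
    }

    // First overload on Fruit objects: reference equality, so separate
    // objects with the same name are all kept.
    public static int CountWithoutComparer(IEnumerable<Fruit> fruits)
    {
        return fruits.Distinct().Count();
    }
}
```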

Another set operator is Union. It returns the distinct elements of two sequences, so an element that appears in both sequences is yielded only once. It has the following signatures:

public static IEnumerable<TSource> Union<TSource>(
    this IEnumerable<TSource> first,
    IEnumerable<TSource> second);

public static IEnumerable<TSource> Union<TSource>(
    this IEnumerable<TSource> first,
    IEnumerable<TSource> second,
    IEqualityComparer<TSource> comparer);

In both overloads, first and second are the two sequences the Union operator is applied to. The third parameter of the second overload is a custom comparer used to compare elements. Listing 9 shows the use of the Union operator:

Listing 9

int[] A = { 1, 2, 3, 4, 5 };

int[] B = { 4, 5, 6, 7, 8};

var union = A.Union (B);

foreach (var n in union)

{

// use n

}

The result of the above query is 1, 2, 3, 4, 5, 6, 7, 8. The duplicates 4 and 5 appear only once each.
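To make the difference from plain concatenation concrete, here is a small sketch (the UnionDemo class and the string data are illustrative) that compares Union with Concat on the arrays from listing 9, and uses the comparer overload with StringComparer.OrdinalIgnoreCase to decide what counts as a duplicate:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class UnionDemo
{
    // Union: elements of first, then elements of second not already yielded.
    public static List<int> UnionOf(int[] a, int[] b)
    {
        return a.Union(b).ToList();
    }

    // Concat: every element of both sequences, duplicates included.
    public static List<int> ConcatOf(int[] a, int[] b)
    {
        return a.Concat(b).ToList();
    }

    // Second Union overload: "Apple" and "apple" count as the same element.
    public static List<string> UnionIgnoringCase(string[] a, string[] b)
    {
        return a.Union(b, StringComparer.OrdinalIgnoreCase).ToList();
    }
}
```

With A and B from listing 9, UnionOf returns eight elements while ConcatOf returns all ten, including 4 and 5 twice.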

Summary

In this post, we had a look at the basic LINQ syntax. A LINQ query is also known as a Query Expression. A query expression is a declarative way of writing queries in which we can perform different operations such as filtering, sorting and grouping. The result of a query expression is a sequence.

Another way of writing a query is a Method-based Query, a more concise form that makes use of lambda expressions and extension methods. Behind the scenes, the compiler translates every query expression into a method-based query, so there is no performance difference between the two; choosing one is a matter of preference.
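To illustrate the point about the two syntaxes, the sketch below writes the same query both ways; the data and method names are made up for illustration. The compiler translates the query expression into calls to Where, OrderByDescending and Select, so both methods return the same result.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class QuerySyntaxDemo
{
    // Query expression: even numbers, largest first, multiplied by ten.
    public static List<int> QueryForm(IEnumerable<int> numbers)
    {
        var query = from n in numbers
                    where n % 2 == 0
                    orderby n descending
                    select n * 10;
        return query.ToList();
    }

    // The equivalent method-based query, written by hand.
    public static List<int> MethodForm(IEnumerable<int> numbers)
    {
        return numbers.Where(n => n % 2 == 0)
                      .OrderByDescending(n => n)
                      .Select(n => n * 10)
                      .ToList();
    }
}
```

For the input 5, 2, 8, 3, 4 both methods return 80, 40, 20.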

A query expression makes use of Standard Query Operators. These operators represent an API defined in the System.Linq namespace. They operate on a sequence to perform different functions such as sorting, filtering, projection, grouping and much more.

With this we come to an end of this post. In the next post, you will see the use of LINQ in real world applications. We will begin with LINQ-to-SQL (a provider of LINQ) which is used to query relational databases. So stay tuned for more…

Wednesday, October 7, 2009

Back to Work...

Hi Guys,

I have been away from my blog for a while since my schedule kept me occupied. But now I am back and will carry on writing. As a priority, I intend to complete my LINQ Explained series. So stay tuned for more...

Thursday, September 3, 2009

Some Useful Developer Tools

Scott Hanselman has posted a list of useful developer and power-user tools for Windows, and I thought of sharing it with you. The post can be found here

Saturday, August 15, 2009

Using Custom Entities in ASP.NET Applications – Part 3

This is the third and final part of my short series on using custom entities in ASP.NET applications. In this part, we will look at some coding techniques commonly used to program around custom entities and data transfer objects. For the sake of brevity, I will not go into the coding details but will focus on the architectural issues. So let’s get started.

Using Custom Entities and DTOs

Many sample applications show how to use custom entities (and DTOs). In addition to the standard UI, BLL and DAL layers, the usual design has a fourth layer (usually known as the Model Layer) consisting of data transfer objects. The DTOs in the Model Layer are used to transfer data between the three layers. Usually, when a user makes a request, it flows from the UI to the BLL and finally to the DAL. In response, the DAL populates one or more DTOs and sends them back to the BLL. The BLL then sends the data back to the UI. Pay close attention to this last statement. To me, this is where different coding techniques can be employed, and the choice is left to the developer. Let me explain these techniques in the following sections with examples.

Using DTOs - Only

In this technique, only DTOs are used between all the layers for transfer of data. The BLL returns the same DTOs received from the DAL to the UI. The UI binds to one or more instances of DTOs. Listing 1 shows this technique:

Listing 1

// Model Layer
using System;

public class EmployeeDTO
{
    // properties
    public int EmployeeID { get; set; }
    public string Name { get; set; }
    public string Address { get; set; }
    public string Designation { get; set; }
    public int Age { get; set; }

    // constructors
    public EmployeeDTO() {}

    public EmployeeDTO(int employeeId, string name, string address, string designation, int age)
    {
        this.EmployeeID = employeeId;
        this.Name = name;
        this.Address = address;
        this.Designation = designation;
        this.Age = age;
    }
}

// Business Logic Layer
using System;
using System.Collections.Generic;

public class Employee
{
    public Employee() {}

    public IList<EmployeeDTO> GetAllEmployees()
    {
        return EmployeeDAL.GetAllEmployees();
    }

    public EmployeeDTO GetEmployeeById(int employeeId)
    {
        return EmployeeDAL.GetEmployeeById(employeeId);
    }

    public int Save(EmployeeDTO employee)
    {
        return EmployeeDAL.Save(employee);
    }

    // Validation…
    // Business Rules…
}

The Employee entity in the BLL has a corresponding data transfer object (EmployeeDTO) in the Model Layer. The Employee entity has different CRUD methods, which accept and return one or more instances of type EmployeeDTO. This technique is shown in figure 1.

UI <---- DTO ----> BLL <---- DTO ----> DAL

Fig 1

There is nothing wrong with using DTOs throughout the application, but to me this technique has a few shortcomings. First, it breaks a basic principle of OO programming: encapsulation. Encapsulation states that an object holds both its state and its behavior, and that the object itself is responsible for managing them; the state and behavior cannot be separated from the object. In the above code, the Employee class (in the BLL) does not define any state. Instead, it relies on EmployeeDTO (in the Model Layer) to hold its state. The object is therefore not in charge of its own state, which clearly breaks encapsulation.

Second, custom entities (defined in the BLL) have associations with each other. These associations are defined with the help of their properties (primary keys). If an entity lacks properties (as in the above code), how do we define an association? I don’t see the point in defining associations between data transfer objects in the Model Layer, since they lack any behavior as well.

Data transfer objects lack behavior, yet they are not without advantages. Since they consist only of properties, they are lightweight objects for sending data across subsystems, which helps improve the throughput of the system.

Using DTOs in parallel to Custom Entities

In this case, DTOs are limited to the BLL and DAL for the transfer of data. However, the UI binds to custom entities instead of DTOs. This is possible since the custom entities define both state and behavior. Before the UI binds to a custom entity, the custom entity copies over the properties of the DTO, so the data is available when the UI binds to it. Let us see this approach in listing 2:

Listing 2

// Model Layer
using System;

public class EmployeeDTO
{
    // properties
    public int EmployeeID { get; set; }
    public string Name { get; set; }
    public string Address { get; set; }
    public string Designation { get; set; }
    public int Age { get; set; }

    // constructors
    public EmployeeDTO() {}

    public EmployeeDTO(int employeeId, string name, string address, string designation, int age)
    {
        this.EmployeeID = employeeId;
        this.Name = name;
        this.Address = address;
        this.Designation = designation;
        this.Age = age;
    }
}

// Business Logic Layer
using System;
using System.Collections.Generic;

public class Employee
{
    // properties
    public int EmployeeID { get; set; }
    public string Name { get; set; }
    public string Address { get; set; }
    public string Designation { get; set; }
    public int Age { get; set; }

    // constructors
    public Employee() {}

    public Employee(int employeeId, string name, string address, string designation, int age)
    {
        this.EmployeeID = employeeId;
        this.Name = name;
        this.Address = address;
        this.Designation = designation;
        this.Age = age;
    }

    // ------------------- CRUD Methods -------------------------------

    public IList<Employee> GetAllEmployees()
    {
        return ConvertListOfEmployees(EmployeeDAL.GetAllEmployees()); // convert to a list of BOs before sending to the UI
    }

    public Employee GetEmployeeById(int employeeId)
    {
        return MapToBO(EmployeeDAL.GetEmployeeById(employeeId)); // convert to a BO before sending to the UI
    }

    public int Save(Employee employee)
    {
        return EmployeeDAL.Save(MapToDTO(employee)); // convert to a DTO before sending to the DAL
    }

    // ------------------- Converters ---------------------------------

    // Convert a list of EmployeeDTO to a list of Employee
    private IList<Employee> ConvertListOfEmployees(IList<EmployeeDTO> employeeList)
    {
        IList<Employee> employeeCollection = new List<Employee>();
        foreach (EmployeeDTO employee in employeeList)
        {
            employeeCollection.Add(MapToBO(employee));
        }
        return employeeCollection;
    }

    // Convert an EmployeeDTO to an Employee
    private Employee MapToBO(EmployeeDTO employee)
    {
        return new Employee(employee.EmployeeID, employee.Name, employee.Address, employee.Designation, employee.Age);
    }

    // Convert an Employee to an EmployeeDTO
    private EmployeeDTO MapToDTO(Employee employee)
    {
        return new EmployeeDTO(employee.EmployeeID, employee.Name, employee.Address, employee.Designation, employee.Age);
    }
}

As you can see, the above Employee entity follows the principle of encapsulation: both the state and behavior are encapsulated within the entity. However, in addition to the CRUD methods, the entity has a set of converters which convert a DTO to a custom entity (CE) and vice versa. In my view, the overhead these conversions add is the only shortcoming of this approach, and it is minimal enough to be ignored in most cases.

UI <---- CE ----> BLL <---- DTO ----> DAL

Fig 2

Why not use Custom Entities directly

Curious readers may be asking, “Why not just use custom entities throughout the layers?” In other words, in addition to the UI, the DAL could reference custom entities as well, and we would not need an extra layer of data transfer objects. The answer is that referencing custom entities throughout the layers creates a circular reference between the BLL and the DAL. The BLL references the DAL, which is a one-way dependency. But if the DAL also references the BLL (where the custom entities live), the dependency becomes bidirectional, which is a circular reference.

Summary

In this part, we saw how to use custom entities in conjunction with data transfer objects. We looked at two different techniques. The first technique uses DTOs to transfer data through all the layers. This technique works well but does not follow the principle of encapsulation. It also leads to confusion about where associations are best defined: should we define them in the BLL or in the Model Layer?

The second technique uses DTOs only between the BLL and DAL. The UI communicates with the BLL through the custom entities. This approach removes both problems of the first approach; however, it incurs a slight overhead of converting custom entities to DTOs and vice versa.

With this, we come to the end of this series. But this is not the end of the topic. I plan to write more on custom entities, as they have become the standard coding practice. Please do provide your feedback through your comments. Stay tuned for more...

Monday, August 10, 2009

Using Custom Entities in ASP.NET Applications – Part 2

This is the second part of my short series on using Custom Entities in ASP.NET applications. In this part, we will see how associations between custom entities are defined. We will also explore the different types of association that can exist between custom entities.

Association between Entities

In the database world, relationships are a fundamental feature: tables are linked to each other through relationships. Relationships are defined with the help of primary keys, which are placed as references (foreign keys) in other tables. In OO programming, this relationship between entities (or classes) is known as association. Each custom entity is uniquely identified by an entity identity, or identifier, which maps to the primary key in the database.

In custom entities, an association is defined by placing the identity of one entity in the other. For example, let’s take two entities, Book and Author. Since a book always has an author (not to mention how many), we can define this association by placing the author identity in the Book entity, as shown in listing 1:

Listing 1

public class Book

{

private int bookID;

private string title;

// other properties

private int AuthorID; // association

// other constructs

}

Types of Association

In the following sections, we will look at the different types of association, also known as cardinality between entities. For this purpose, I will use the following entities:

• Customer

• Orders

• Products

• Suppliers

One-to-Many Association

In this type of association, one instance of an entity type is associated with multiple instances of the second type. For example, one Customer can have many Orders. In this case, the Customer is the owner of this association. So the Customer entity specifies the mapping by having a child list object of type Order as shown in listing 3:

Listing 3

public class Customer

{

private int CustomerID;

// other properties

private IList<Order> OrderCollection; // 1:M association

}

Many-to-One Association

In this type of association, multiple instances of one entity type are associated with a single instance of another type. For example, many Orders (owner) belong to one Customer. So each Order specifies the mapping by including the identity of the related Customer as shown in listing 4:

Listing 4

public class Order

{

private int OrderID;

// other properties

private int CustomerID; // M:1 association

}

Many-to-Many Association

In a many to many association, multiple instances of one entity type are associated with multiple instances of the other type. For example, many Products have many Suppliers and vice versa. In this case, both entities are the owner and have a child list collection of the related entity as shown in listing 5:

Listing 5

public class Product

{

private int ProductID;

// other properties

private IList<Supplier> SupplierCollection; // M:M association

}

public class Supplier

{

private int SupplierID;

// other properties

private IList<Product> ProductCollection; // M:M association

}

One-to-One Association

One-to-one association is not very common and exists only in special cases. For example, if a database table is very large and most of its fields are rarely used, the database designer may move those fields into a separate table to improve performance. Both tables are still linked through the same identity. In custom entities, such an association is represented by a single entity which has all the fields.

Using an Identifier or a Full-Blown Object

One question commonly asked on different forums is whether, when defining an association, we should use the entity identity (a primitive data type) or a full-blown object of the entity being referenced. In other words, listing 1 can be rewritten as follows:

Listing 6

public class Book

{

private int bookID;

private string title;

// other properties

private Author author; // association – Full Blown Object

// other constructs

}

Note that in the above listing we are not using the simple AuthorID of type integer to define the mapping. Instead, we have used a child object of type ‘Author’. This is one approach taken by developers. To me, the answer to the above question depends on different factors, including the technology being used and the user requirements. Let me explain both of these factors in detail.

First, ASP.NET comes with many built-in controls which make data binding a breeze. These controls, including the ObjectDataSource, GridView and ListView, are capable of binding to custom entities as well. However, these controls can only bind to properties of simple types (int, string, bool etc.). They cannot bind to a complex child object or collection. To me, this is a shortcoming in the framework, and hopefully it will be addressed in a future version. Although there are workarounds, they do not offer a complete solution. So if the custom entity holds a reference of a simple type, it can easily be bound to a data control.

Second, the user requirements also play an important role. Suppose we are creating an application for a retail outlet. A Customer places an Order for different purchases. This customer may or may not already exist in the system. If we have modeled our Order class to have a full-blown Customer child object, we can easily create both the Order and the Customer object, because we have full access to the Customer object through the Order object. When the Order is passed down to the DAL, the entire Customer object is passed along and saved to the database. On the other hand, if the Order only had a reference to the Customer identity, we would first have to create a new Customer and then assign its identity to the Order object, which makes it a two-step process.
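A minimal sketch of the two modeling choices, assuming hypothetical OrderWithId and OrderWithCustomer classes and an in-memory OrderStore standing in for the DAL (none of these names come from the post): with an identifier, the customer must be saved first so that its ID can be assigned to the order; with a full-blown object, a new customer travels with the order and is saved in the same call.

```csharp
using System.Collections.Generic;

public class Customer
{
    public int CustomerID { get; set; }   // 0 means "not saved yet"
    public string Name { get; set; }
}

// Identifier style: the order stores only the customer's identity.
public class OrderWithId
{
    public int OrderID { get; set; }
    public int CustomerID { get; set; }
}

// Full-blown object style: the order carries the Customer itself.
public class OrderWithCustomer
{
    public int OrderID { get; set; }
    public Customer Customer { get; set; }
}

// Hypothetical in-memory stand-in for the DAL.
public class OrderStore
{
    private int nextCustomerId = 1;
    public List<Customer> Customers { get; } = new List<Customer>();
    public List<int> OrderCustomerIds { get; } = new List<int>();

    public int SaveCustomer(Customer customer)
    {
        customer.CustomerID = nextCustomerId++;
        Customers.Add(customer);
        return customer.CustomerID;
    }

    // Identifier style: the caller must already have saved the customer.
    public void SaveOrder(OrderWithId order)
    {
        OrderCustomerIds.Add(order.CustomerID);
    }

    // Object style: a new customer is saved along with the order.
    public void SaveOrder(OrderWithCustomer order)
    {
        if (order.Customer.CustomerID == 0)
            SaveCustomer(order.Customer);
        OrderCustomerIds.Add(order.Customer.CustomerID);
    }
}
```

The identifier version needs two calls (SaveCustomer, then SaveOrder), while the object version needs one, which mirrors the two-step versus one-step trade-off described above.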

In the end, to me it remains a matter of preference whether to use a reference of a simple or a custom type. Different factors come into play and have to be considered before the choice is locked down.

Light-Weight Model and Association

When it comes to architecture, different people have different opinions (and they are justified as well). Associations between entities tend to get very complex and difficult to handle. Custom entities may have a deep hierarchy of associations. For example, a Product is composed of Components, each Component has many Parts, and each Part may be made up of Sub-Parts. Such a hierarchy raises many issues. For example, if we make changes at any level of the hierarchy, the entire hierarchy has to be persisted. Similarly, if we make changes at any level and the parent hierarchy leaves the transaction, all the properties have to be restored to their original state. There are numerous such scenarios with a deep hierarchy.

An alternative way to deal with such associations is to define a light-weight model. This means there is no need to hold a child object within the parent object. We can instead use methods (or, more specifically, services) to fetch the child data. This is similar to a Service-Oriented Architecture, where we rely on services. The idea is simply to fetch the data when it is required.

For example, using the above approach, we can rewrite listing 1 as following:

Listing 7

public class Book
{
    private int bookID;
    private string title;
    // other properties

    public Author GetBookAuthor()
    {
        // AuthorDAL.GetAuthorByBookId is a hypothetical data-access (or service) call
        return AuthorDAL.GetAuthorByBookId(this.bookID);
    }

    // other constructs
}

In the above listing, an Author object is returned by the GetBookAuthor method. This eliminates the need to hold an Author object within the Book class, and the author data is fetched only when it is required. However, my opinion on this is not final.

Summary

In this post, we saw how associations are defined between entities. We also looked at the different types of association, also known as ‘cardinality’, and at the different scenarios that decide whether to use a reference of a primitive type or a full-blown object. In the next post, we will see how to code around custom entities. Stay tuned for more…

Friday, August 7, 2009

Using Custom Entities in ASP.NET Applications – Part 1

Recently there has been a surge of architecture-related discussion in the .NET community. This stems from the fact that business applications are getting complex and intricate to build. Building N-Tier business applications is both a requirement and a challenging task, and it cannot be achieved by using a specific framework such as the .NET Framework alone. The .NET Framework does provide a rich library for building enterprise business applications, but this is only half the story. Sound object-oriented principles supported by architectural patterns apply across all development platforms without targeting a specific technology. This combination of technology, OO principles and architecture results in applications which are scalable, fault tolerant and interactive.

In this short series, I will talk about using Business Entities in ASP.NET applications. The reason behind this series is to offer my two cents on the questions most frequently asked on the forums about developing N-Tier applications using Custom Entities. This discussion is not intended to explain OO programming. The focus is on architecture issues when using Custom Entities. I will start with simple concepts and then extend them from there.

What’s wrong with DataSet

Honestly speaking, DataSets are not evil at all and I prefer to use them whenever I can. It’s easy to program around a DataSet and, IMHO, it works great for small to mid-sized applications. If you really fine-tune the data layer accessing the database, a DataSet gives optimal performance. In addition to this, many of the data controls available in ASP.NET, including ObjectDataSource, GridView and DataList, work really well with DataSets. However, a DataSet has its limitations and is not suited for every situation, especially in enterprise applications. The most commonly faced problems include:

• They are not strongly-typed

• They lack validation and business rules.

• They do not support OO principles such as Encapsulation, Inheritance etc

• DataSets have performance issues when used with large amounts of data (see this)

• DataSets are not interoperable with applications written in other languages such as Java, PHP etc

• The use of DataSets dictates a data-first design, whereas OO principles focus on a domain-first design

• Maintenance becomes difficult when an application starts growing with time (which every application does)

The alternative is to use custom entities discussed in the following section.

Using Custom Entities

Seasoned programmers tend to use classes for developing business applications. Classes allow the developers to take advantage of a rich OO programming model. This model comes with a set of well defined OO principles including Data Abstraction, Information Hiding, Encapsulation, Inheritance etc.

In its essence, a custom entity is just a regular class. But what makes it different is that it is a domain object. In business applications, domain objects identify the key entities within the system. Each domain object can be represented by a Custom Entity.

In addition to supporting OO principles, a custom entity defines business rules and custom validation. This subtle addition is the real difference between a regular class and a custom entity. For example, let’s look at the following Book custom entity:

public class Book
{
    // properties
    public int BookId { get; set; }
    public string ISBN { get; set; }
    public string Title { get; set; }
    public string Author { get; set; }

    // constructors
    public Book() {}

    public Book(string isbn, string title, string author)
    {
        this.ISBN = isbn;
        this.Title = title;
        this.Author = author;
    }

    // simple method
    public int BuyBook(string isbn)
    {
        // process the purchase
        return 0; // placeholder
    }

    // validation rule: checks the state of this object
    public bool Validate()
    {
        if (this.ISBN == null)
            return false;
        // other rules
        return true;
    }

    // business rule
    public int isPremiumMember(int customerId)
    {
        // if the customer is a premium member,
        // they are eligible for a gift voucher:
        // return voucherNumber
        return 0; // placeholder: no voucher
    }
}

The above entity defines the normal properties, constructors and a method (BuyBook). In addition to these constructs, the class also defines a validation method (Validate) and a business rule (isPremiumMember). The Validate method validates the state of the object, while the isPremiumMember method determines whether the customer is eligible for a gift voucher, given that they are a premium member. This is just a trivial example. Real custom entities can have a complex set of business rules.

Different terms, including Business Object, Business Entity and Custom Business Entity, are used interchangeably to refer to a custom entity. Purists may differentiate between them, but IMHO they are all the same. It’s just a matter of which term different users prefer.

Data Transfer Objects

Data Transfer Object (DTO) is a design pattern used to transfer data between software application subsystems. It is basically a data container. The main difference between a DTO and a Custom Entity is that a DTO does not have any behavior or responsibility and only consists of properties. A DTO is also known as a dumb object due to the lack of any behavior. Using a DTO has several advantages including:

• All the data is bundled into one DTO and passed down to a layer

• Since an entire object is passed down the wire, adding or removing properties on the object does not affect the calls

• DTOs are lightweight objects, which give better performance compared to DataSets

With the above in mind, the Book BO in the previous section can have the following corresponding BookDTO:

public class BookDTO
{
    public int BookId { get; set; }
    public string ISBN { get; set; }
    public string Title { get; set; }
    public string Author { get; set; }

    public BookDTO() {}

    public BookDTO(string isbn, string title, string author)
    {
        this.ISBN = isbn;
        this.Title = title;
        this.Author = author;
    }
}

Using Custom Entities and DTOs

A typical 3-tier (logical) application has three layers, namely the User Interface Layer (UI), the Business Logic Layer (BLL) and the Data Access Layer (DAL). The UI layer provides an interface for the user to interact with the system. The BLL (application layer, middle layer) is where all the business logic resides, and the DAL deals with operations on the data source. The UI never interacts with the DAL directly. When a user makes a request from the UI, the request is directed to the BLL. The BLL then forwards the request to the DAL. The typical flow of a user request is as follows:

UI <-> BLL <-> DAL

The question is how data is transferred between the layers. This is where DTOs come into play. When the user request reaches the DAL, the DAL fetches the data from the data source and bundles it into a DTO. The DTO is then returned to the BLL and on to the UI. Similarly, when a user creates or updates a record, the entire DTO is transferred back to the BLL and then to the DAL.

The delete process can be handled by passing just the primary key value.
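The request flow can be sketched in a few lines. This is an illustration under stated assumptions, not code from the series: BookBLL and BookDAL are hypothetical names, and a Dictionary stands in for the database. The BookDTO carries the data, the BLL is the only layer the UI calls, and a delete needs only the primary key.

```csharp
using System.Collections.Generic;

// DTO: properties only, no behavior.
public class BookDTO
{
    public int BookId { get; set; }
    public string ISBN { get; set; }
    public string Title { get; set; }
}

// Hypothetical DAL: a Dictionary keyed by BookId stands in for the database.
public class BookDAL
{
    private readonly Dictionary<int, BookDTO> table = new Dictionary<int, BookDTO>();

    public BookDTO GetById(int bookId)
    {
        BookDTO dto;
        return table.TryGetValue(bookId, out dto) ? dto : null;
    }

    // Create or update: the entire DTO travels down to the DAL.
    public void Save(BookDTO dto)
    {
        table[dto.BookId] = dto;
    }

    // Delete: the primary key value is enough.
    public bool Delete(int bookId)
    {
        return table.Remove(bookId);
    }
}

// Hypothetical BLL: the only layer the UI calls; it forwards requests to the DAL.
public class BookBLL
{
    private readonly BookDAL dal = new BookDAL();

    public BookDTO GetBook(int bookId)
    {
        return dal.GetById(bookId);
    }

    public void SaveBook(BookDTO dto)
    {
        // Validation and business rules would run here before the DTO reaches the DAL.
        dal.Save(dto);
    }

    public bool DeleteBook(int bookId)
    {
        return dal.Delete(bookId);
    }
}
```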

Is a DTO a Custom Entity

This is an interesting question. I have seen many applications where both terms are used interchangeably. There is no harm in doing so, but I tend to disagree. A DTO is really a design pattern used to transfer data between subsystems. Although it is a class, keep in mind that a DTO does not define any behavior, business rules, validation or relationships. In the following posts, I will show you how a DTO is used both alongside a custom entity and on its own.

Summary

In this post, I gave you an overview of what custom entities are and covered the basics of using them when developing business applications. In the following posts, we will dive deeper and explore more about custom entities.

Thursday, July 9, 2009

Getting Started with WCF Service

In this post, I will walk you through creating your first Windows Communication Foundation Service. Those new to WCF should read my previous post to understand the basic concepts of WCF Services.

Introduction

Before a WCF Service can be consumed by a client application, the following three pieces are required:

Service: The actual WCF Service which provides business functionality

Host: A hosting environment which hosts the service

Client: A client application/service which invokes the WCF Service

In the following sections, we will create a solution which consists of three separate projects representing the service, host and the client respectively. I will be using Visual Studio 2008 to create the application.

Getting Started

1. Start a new instance of Visual Studio 2008.

2. Click on File -> New Project. From the Project types pane, select Visual Studio Solutions

3. From the Template pane, select Blank Solution

4. Enter WCFIntroduction for the Name. Also specify the location according to your working preference

This will create a blank solution as shown in figure 1. Let us now create the actual WCF Service.

Fig 1

Creating the Service

1. In the solution explorer, right click on ‘WCFIntroduction’ and select Add -> New Project.

2. Select Class Library template and name it as WCFCalculator. Make sure that the location is set to ..\WCFIntroduction as shown in figure 2. Click OK to create the project.

Fig 2

3. The newly created project contains a single file ‘Class1.cs’. Rename it to ‘WCFCalculatorService.cs’. We will return to this file shortly.

4. From the solution explorer, right click on ‘WCFCalculator’ project and select ‘Add Reference’. The ‘Add Reference’ dialog appears. From the .NET tab, select ‘System.ServiceModel’ and click OK. This adds a reference to the System.ServiceModel assembly, which is required to work with WCF Services

5. Again right click on ‘WCFCalculator’ project and select Add -> New Item. Select ‘Class’ template, name it as ‘IWCFCalculatorService.cs’ as shown in figure 3 and click Add

6. This will create a new class file ready to be coded

Fig 3

7. Change the class definition to ‘public interface IWCFCalculatorService’. Type in the code as shown in listing 1 and save the file

Listing 1

8. Let us now return to the ‘WCFCalculatorService.cs’ file. Open it and type in the following code:

Listing 2

Compile this project to make sure there are no errors. We have just created a very basic WCF Service used to add two numbers. The next step is to host this service in a hosting environment. In the following section, we will create a Windows console application which will host this service.
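Listings 1 and 2 are not reproduced in this copy of the post. Based on the description (a basic service that adds two numbers), a minimal sketch of what the contract and its implementation might look like follows; the Add operation and its int parameters are assumptions. It needs the System.ServiceModel reference from step 4 (.NET Framework, as used with Visual Studio 2008 here). For brevity both types are in one block, while the post puts them in IWCFCalculatorService.cs and WCFCalculatorService.cs.

```csharp
using System.ServiceModel;

namespace WCFCalculator
{
    // Service contract: the operations the service exposes to clients.
    [ServiceContract]
    public interface IWCFCalculatorService
    {
        [OperationContract]
        int Add(int a, int b);   // assumed operation: adds two numbers
    }

    // Service implementation of the contract.
    public class WCFCalculatorService : IWCFCalculatorService
    {
        public int Add(int a, int b)
        {
            return a + b;
        }
    }
}
```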

Hosting the Service

1. Right click on the solution ‘WCFIntroduction’ and select Add -> New Project.

2. Select ‘Console Application’ template, name it as ‘Host’ as shown in figure 4 and click OK

Fig 4

3. Right click on ‘Host’ project and select Add Reference. From the .NET tab, select System.ServiceModel. From the Projects tab, select ‘WCFCalculator’ and press OK

4. The Host project consists of one file ‘Program.cs’. Open this file and type in the code as shown in listing 3

Listing 3

5. Compile this project. Make sure that the Host project is set as Startup Project (You can do so by right clicking on Host project and select ‘Set as Startup Project’). Press Ctrl+F5 to run this project. You should see a command window with message ‘Press to terminate service...’ as shown in figure 5. This means the service is up and runninig. Keep this window open (service is active). Next we will create a client application which will interact with this service. Let us return to our solution.

Fig 5

Consuming the Service – Client Application

1. Right click on solution ‘WCFIntroduction’ and select Add -> New Project

2. Select ‘Console Application’ template, name it as Client and click OK as shown in figure 6

Fig 6

3. Add a reference to System.ServiceModel namespace (as done in the above steps)

4. This project consists of a single file ‘Program.cs’. Open this file and type in the code as shown in listing 4

Listing 4

5. Here is the tricky part. In the above code, a proxy is created using the Service Contract (IWCFCalculatorService). To use this interface, copy it over from the ‘WCFCalculator’ project to the ‘Client’ project. Don’t forget to change the namespace to ‘Client’ in this file (In short the same interface now exists in both the ‘WCFCalculator’ and ‘Client’ project).

6. Compile the ‘Client’ project and make it the Startup Project (Right click on ‘Client’ project and select ‘Set as Startup Project’). Press Ctrl+F5 and you should see the output as shown in figure 7

Fig 7

Wow, you just create a WCF service from scratch and got it working. Though it may not be simple, I am sure it’s worth a try.

How it Works

Let me explain some of the concepts discussed in the above sections. If you have read my previous post, many of the details should be self explanatory. In listing 1, the IWCFCalculatorService interface is the Service Contract since it is decorated with the [ServiceContract] attribute. The single method within this interface - AddNumbers – is a WCF method as its decorated with the [OperationContract] attribute. Since IWCFCalculatorService is a service contract, any class implementing this interface becomes a WCF Service. This is also true for the WCFCalculatorService class as shown in listing 2.

In listing 3, an instance of ServiceHost class is created. This class provides a host for a WCF Service. It accepts two parameters, Type and params respectively. The Type parameter specifies the type (WCFCalculator.WCFCalculatorService) of the service while params specifies a collection of addresses (http://localhost:8000/WCFCalculator) where the service can be hosted. Next the ServiceHost defines a new Endpoint by calling the AddServiceEndPoint (). This method accepts two parameters including a contract (WCFCalculator.IWCFCalculatorService) and the type of binding (basicHttp) used by the Endpoint. Once an endpoint is defined, the service is open and available to accept requests from the client.

For a client to communicate with the service, a proxy is required. In listing 4, the generic ChannelFactory service model type generates the proxy and the related channel stack. This factory uses an instance of the EndpointAddress class which provides a unique network address (which points to the service endpoint) used by the client to communicate with the service. The factory creates a service of type IWCFCalculatorService. Later the generated proxy is used to invoke the required funcationlity.

Summary

In this post, I walked you through creating your first WCF Service. You may find it a bit difficult in the beginning but as you gain experience, WCF is a great tool to work with. In future post, I will talk more about WCF. So stay tuned for more…

Introduction

Before a WCF Service can be consumed by a client application, the following three pieces must be in place:

Service: The actual WCF Service which provides business functionality

Host: A hosting environment which hosts the service

Client: A client application/service which invokes the WCF Service

In the following sections, we will create a solution which consists of three separate projects representing the service, host and the client respectively. I will be using Visual Studio 2008 to create the application.

Getting Started

1. Start a new instance of Visual Studio 2008.

2. Click File -> New Project. In the Project types pane, select Visual Studio Solutions.

3. In the Templates pane, select Blank Solution.

4. Enter WCFIntroduction as the project name, and specify a location that suits your working preference.

This will create a blank solution as shown in figure 1. Let us now create the actual WCF Service.

Fig 1

Creating the Service

1. In Solution Explorer, right-click ‘WCFIntroduction’ and select Add -> New Project.

2. Select the Class Library template and name it WCFCalculator. Make sure that the location is set to ..\WCFIntroduction as shown in figure 2. Click OK to create the project.

Fig 2

3. The newly created project contains a single file ‘Class1.cs’. Rename it to ‘WCFCalculatorService.cs’. We will return to this file shortly.

4. In Solution Explorer, right-click the ‘WCFCalculator’ project and select ‘Add Reference’. In the ‘Add Reference’ dialog, select ‘System.ServiceModel’ from the .NET tab and click OK. This adds a reference to the System.ServiceModel assembly, which is required to work with WCF services.

5. Right-click the ‘WCFCalculator’ project again and select Add -> New Item. Select the ‘Class’ template, name it ‘IWCFCalculatorService.cs’ as shown in figure 3, and click Add.

6. This creates a new class file, ready to be coded.

Fig 3

7. Change the class definition to ‘public interface IWCFCalculatorService’, type in the code shown in listing 1, and save the file.

Listing 1

    using System;
    using System.ServiceModel;

    namespace WCFCalculator
    {
        [ServiceContract(Namespace = "http://youruniquedomain")]
        public interface IWCFCalculatorService
        {
            [OperationContract]
            int AddNumbers (int x, int y);
        }
    }

8. Let us now return to the ‘WCFCalculatorService.cs’ file. Open it and type in the following code:

Listing 2

    using System;
    using System.ServiceModel;

    namespace WCFCalculator
    {
        public class WCFCalculatorService : IWCFCalculatorService
        {
            public int AddNumbers (int x, int y)
            {
                return x + y;
            }
        }
    }

Build this project to make sure there are no errors. We have just created a very basic WCF service that adds two numbers. The next step is to host this service in a hosting environment. In the following section, we will create a Windows console application that hosts this service.

Hosting the Service

1. Right click on the solution ‘WCFIntroduction’ and select Add -> New Project.

2. Select the ‘Console Application’ template, name it ‘Host’ as shown in figure 4, and click OK.

Fig 4

3. Right click on ‘Host’ project and select Add Reference. From the .NET tab, select System.ServiceModel. From the Projects tab, select ‘WCFCalculator’ and press OK

4. The Host project consists of one file ‘Program.cs’. Open this file and type in the code as shown in listing 3

Listing 3

    using System;
    using System.ServiceModel;

    namespace Host
    {
        class Program
        {
            static void Main (string[] args)
            {
                // Host the service type at the base address http://localhost:8000/WCFCalculator
                using (ServiceHost host = new ServiceHost (typeof (WCFCalculator.WCFCalculatorService), new Uri ("http://localhost:8000/WCFCalculator")))
                {
                    // Expose the contract over basicHttpBinding at the relative address "WCFCalculatorService"
                    host.AddServiceEndpoint (typeof (WCFCalculator.IWCFCalculatorService), new BasicHttpBinding (), "WCFCalculatorService");

                    host.Open ();

                    Console.WriteLine ("Press Enter to terminate service...");
                    Console.ReadLine ();
                }
            }
        }
    }

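5. Build this project. Make sure that the Host project is set as the startup project (right-click the Host project and select ‘Set as Startup Project’). Press Ctrl+F5 to run it. You should see a command window with the message ‘Press Enter to terminate service...’ as shown in figure 5. This means the service is up and running. Keep this window open so that the service stays active. Next, we will create a client application that interacts with this service. Let us return to our solution.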

Fig 5
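In listing 3, any exception thrown by host.Open() goes unhandled, and the console window simply closes. The following is a minimal sketch of a more defensive Program.cs for the Host project, assuming the same project setup (a reference to WCFCalculator). The CreateHost helper is a hypothetical name introduced only for illustration. The sketch also prints the full address of each endpoint, which should match the address the client uses later. AddressAccessDeniedException is a real WCF exception type; on Windows Vista and later it commonly appears when the process is not allowed to listen on the HTTP URL, for example when Visual Studio is not running as administrator.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Description;

namespace Host
{
    class Program
    {
        // Hypothetical helper: builds the same host as listing 3 without opening it.
        public static ServiceHost CreateHost()
        {
            ServiceHost host = new ServiceHost(
                typeof(WCFCalculator.WCFCalculatorService),
                new Uri("http://localhost:8000/WCFCalculator"));

            // The relative address is resolved against the HTTP base address.
            host.AddServiceEndpoint(
                typeof(WCFCalculator.IWCFCalculatorService),
                new BasicHttpBinding(),
                "WCFCalculatorService");
            return host;
        }

        static void Main(string[] args)
        {
            ServiceHost host = CreateHost();
            try
            {
                host.Open();

                // Print the full address of every endpoint the host exposes.
                foreach (ServiceEndpoint endpoint in host.Description.Endpoints)
                {
                    Console.WriteLine("Listening at: " + endpoint.Address.Uri.AbsoluteUri);
                }

                Console.WriteLine("Press Enter to terminate service...");
                Console.ReadLine();
                host.Close();
            }
            catch (AddressAccessDeniedException ex)
            {
                // The process may not register the HTTP URL (e.g. not running as administrator).
                Console.WriteLine("Access to the HTTP address was denied: " + ex.Message);
                host.Abort();
            }
            catch (CommunicationException ex)
            {
                // Any other communication failure while opening or closing the host.
                Console.WriteLine("The service could not be started: " + ex.Message);
                host.Abort();
            }
        }
    }
}
```

The more specific AddressAccessDeniedException is caught before CommunicationException, its base class; Abort() is used on failure because Close() may itself throw on a faulted host.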

Consuming the Service – Client Application

1. Right click on solution ‘WCFIntroduction’ and select Add -> New Project

2. Select the ‘Console Application’ template, name it Client, and click OK as shown in figure 6.

Fig 6

3. Add a reference to the System.ServiceModel assembly (as in the previous steps).

4. This project consists of a single file ‘Program.cs’. Open this file and type in the code as shown in listing 4

Listing 4

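    using System;
    using System.ServiceModel;

    namespace Client
    {
        class Program
        {
            static void Main (string[] args)
            {
                EndpointAddress endPoint;
                IWCFCalculatorService proxy;
                int result;

                // Full address of the endpoint exposed by the Host (base address + relative address)
                endPoint = new EndpointAddress ("http://localhost:8000/WCFCalculator/WCFCalculatorService");

                // Create a proxy for the contract; the binding must match the service's binding
                proxy = ChannelFactory<IWCFCalculatorService>.CreateChannel (new BasicHttpBinding (), endPoint);

                result = proxy.AddNumbers (2, 3);
                Console.WriteLine ("2 + 3 = " + result.ToString ());

                // Close the channel once it is no longer needed
                ((IClientChannel) proxy).Close ();

                Console.WriteLine ("Press Enter to terminate client...");
                Console.ReadLine ();
            }
        }
    }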

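5. Here is the tricky part. The code above creates a proxy from the service contract (IWCFCalculatorService), so the Client project needs its own copy of that interface. Copy the ‘IWCFCalculatorService.cs’ file from the ‘WCFCalculator’ project to the ‘Client’ project, and don’t forget to change its namespace to ‘Client’ (in short, the same interface now exists in both the ‘WCFCalculator’ and ‘Client’ projects). Changing the C# namespace is safe because WCF identifies the contract by the name and namespace in the [ServiceContract] attribute, and those stay the same.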

6. Compile the ‘Client’ project and make it the Startup Project (Right click on ‘Client’ project and select ‘Set as Startup Project’). Press Ctrl+F5 and you should see the output as shown in figure 7

Fig 7
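For reference, the interface file that step 5 leaves in the Client project should look roughly like the sketch below: a copy of listing 1 in which only the C# namespace changes. The [ServiceContract] Namespace value and the AddNumbers signature are copied unchanged, since they are what the client and service must agree on.

```csharp
using System.ServiceModel;

// The Client project's copy of the service contract from listing 1.
// Only the C# namespace differs; the attribute values and the operation
// signature must stay identical to the service's version.
namespace Client
{
    [ServiceContract(Namespace = "http://youruniquedomain")]
    public interface IWCFCalculatorService
    {
        [OperationContract]
        int AddNumbers(int x, int y);
    }
}
```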

Wow, you have just created a WCF service from scratch and got it working. It may not have been simple, but I am sure it was worth the effort.

How it Works

Let me explain some of the concepts used in the above sections. If you have read my previous post, many of the details should be self-explanatory. In listing 1, the IWCFCalculatorService interface is the service contract, since it is decorated with the [ServiceContract] attribute. The single method in this interface, AddNumbers, is a service operation because it is decorated with the [OperationContract] attribute. Any class that implements a service contract can be hosted as a WCF service; the WCFCalculatorService class in listing 2 is such a class.
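To see what WCF actually derives from these attributes, a contract can be inspected with ContractDescription.GetContract from System.ServiceModel.Description. The sketch below assumes a hypothetical stand-alone console project (the ContractInspection namespace is made up for illustration, and the interface is a copy of listing 1). It should print the contract name, which defaults to the interface name, the namespace taken from the [ServiceContract] attribute, and the single AddNumbers operation.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Description;

namespace ContractInspection // hypothetical project, for illustration only
{
    // Same contract as listing 1.
    [ServiceContract(Namespace = "http://youruniquedomain")]
    public interface IWCFCalculatorService
    {
        [OperationContract]
        int AddNumbers(int x, int y);
    }

    class Program
    {
        static void Main(string[] args)
        {
            // Build the contract description that WCF derives from the attributes.
            ContractDescription contract =
                ContractDescription.GetContract(typeof(IWCFCalculatorService));

            Console.WriteLine("Contract: " + contract.Name + " (" + contract.Namespace + ")");

            // Each [OperationContract] method becomes one operation in the description.
            foreach (OperationDescription operation in contract.Operations)
            {
                Console.WriteLine("Operation: " + operation.Name);
            }
        }
    }
}
```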

In listing 3, an instance of the ServiceHost class is created. This class provides a host for a WCF service. Its constructor accepts two parameters: a Type and a params array of base addresses. The Type parameter specifies the type of the service (WCFCalculator.WCFCalculatorService), while the params array lists the base addresses at which the service is hosted (here, http://localhost:8000/WCFCalculator). Next, the ServiceHost defines a new endpoint by calling AddServiceEndpoint(). This method accepts three parameters: the contract (WCFCalculator.IWCFCalculatorService), the binding used by the endpoint (BasicHttpBinding), and the endpoint address (WCFCalculatorService), which is relative to the base address. This is why the client connects to http://localhost:8000/WCFCalculator/WCFCalculatorService. Once the endpoint is defined, calling Open() makes the service available to accept requests from clients; the host stays open until the using block disposes it after Enter is pressed.
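Copying the interface into the client (step 5 of the client section) works, but WCF can also publish the contract as WSDL so that tools such as svcutil.exe or Visual Studio's Add Service Reference can generate a proxy instead. The following is a minimal sketch, assuming the same Host project as listing 3; EnableMetadata is a hypothetical helper name, while ServiceMetadataBehavior and its HttpGetEnabled property are the standard WCF switch for HTTP GET metadata. With the base address from listing 3, the WSDL should then be reachable at http://localhost:8000/WCFCalculator?wsdl while the host is running.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Description;

namespace Host
{
    class Program
    {
        // Hypothetical helper: enables WSDL publishing over HTTP GET at the base address.
        public static void EnableMetadata(ServiceHost host)
        {
            // Reuse an existing behavior (e.g. one added from a config file) if present.
            ServiceMetadataBehavior metadata =
                host.Description.Behaviors.Find<ServiceMetadataBehavior>();
            if (metadata == null)
            {
                metadata = new ServiceMetadataBehavior();
                host.Description.Behaviors.Add(metadata);
            }
            metadata.HttpGetEnabled = true;
        }

        static void Main(string[] args)
        {
            using (ServiceHost host = new ServiceHost(
                typeof(WCFCalculator.WCFCalculatorService),
                new Uri("http://localhost:8000/WCFCalculator")))
            {
                // Same endpoint as listing 3.
                host.AddServiceEndpoint(
                    typeof(WCFCalculator.IWCFCalculatorService),
                    new BasicHttpBinding(),
                    "WCFCalculatorService");

                // Must be configured before Open(); the description is fixed once the host opens.
                EnableMetadata(host);

                host.Open();
                Console.WriteLine("Press Enter to terminate service...");
                Console.ReadLine();
            }
        }
    }
}
```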